Batching equivariant matrices

This notebook introduces you to one aspect of generating matrices that you will inevitably face when training a model: batching.

Prerequisites

Before reading this notebook, make sure you have read the notebook on computing a matrix, which introduces the basic concepts of graph2mat that we assume are already known. We will also use exactly the same setup; the only difference is that we will compute two matrices at the same time instead of just one.

[1]:

import numpy as np

# So that we can plot sisl geometries

import sisl.viz

from e3nn import o3

from graph2mat import (

PointBasis,

BasisTableWithEdges,

BasisConfiguration,

MatrixDataProcessor,

)

from graph2mat.bindings.torch import TorchBasisMatrixData, TorchBasisMatrixDataset

from graph2mat.bindings.e3nn import E3nnGraph2Mat

from graph2mat.tools.viz import plot_basis_matrix


The matrix-computing function

As we have already seen in the notebook on computing a matrix, we need to define a basis, a basis table, a data processor and the shape of the node features. With all this, we can initialize the matrix-computing function. We define everything exactly as in the other notebook:

[2]:

# The basis

point_1 = PointBasis("A", R=2, basis="0e", basis_convention="spherical")

point_2 = PointBasis("B", R=5, basis="2x0e + 1o", basis_convention="spherical")

basis = [point_1, point_2]

# The basis table.

table = BasisTableWithEdges(basis)

# The data processor.

processor = MatrixDataProcessor(

basis_table=table, symmetric_matrix=True, sub_point_matrix=False

)

# The shape of the node features.

node_feats_irreps = o3.Irreps("0e + 1o")

# The fake environment representation function that we will use

# to compute node features.

def get_environment_representation(data, irreps):
    """Function that mocks a true calculation of an environment representation.

    Computes a random array and then ensures that the numbers obey our particular
    system's symmetries.
    """

    node_features = irreps.randn(data.num_nodes, -1)

    # The point in the middle sees the same in -X and +X directions
    # therefore its representation must be 0.
    # In principle the +/- YZ are also equivalent, but let's say that there
    # is something breaking the symmetry to make the numbers more interesting.
    # Note that the spherical harmonics convention is YZX.
    node_features[1, 3] = 0

    # We make both A points have equivalent features except in the X direction,
    # where the features are opposite
    node_features[2::3, :3] = node_features[0::3, :3]
    node_features[2::3, 3] = -node_features[0::3, 3]

    return node_features

# The matrix readout function

model = E3nnGraph2Mat(

unique_basis=basis,

irreps=dict(node_feats_irreps=node_feats_irreps),

symmetric=True,

preprocessing_edges=None,

)

Creating two configurations

Now, we will create two configurations instead of one. Both will have the same coordinates; the only difference is that we will swap the point types. However, you could also give each of them different coordinates or a different number of atoms.

We’ll store both configurations in a configs list.

[3]:

positions = np.array([[0, 0, 0], [6.0, 0, 0], [12, 0, 0]])

config1 = BasisConfiguration(

point_types=["A", "B", "A"],

positions=positions,

basis=basis,

cell=np.eye(3) * 100,

pbc=(False, False, False),

)

config2 = BasisConfiguration(

point_types=["B", "A", "B"],

positions=positions,

basis=basis,

cell=np.eye(3) * 100,

pbc=(False, False, False),

)

configs = [config1, config2]

As we did in the other notebook, we plot the configurations to see what they look like and to visualize the overlaps:

[4]:

geom1 = config1.to_sisl_geometry()

geom1.plot(show_cell=False, atoms_style={"size": geom1.maxR(all=True)}).update_layout(

title="Config 1"

).show()

geom2 = config2.to_sisl_geometry()

geom2.plot(show_cell=False, atoms_style={"size": geom2.maxR(all=True)}).update_layout(

title="Config 2"

).show()


Build a dataset

With all our configurations, we can create a dataset. The class that does this is TorchBasisMatrixDataset, which, apart from the configurations, needs the data processor as usual.

[5]:

dataset = TorchBasisMatrixDataset(configs, data_processor=processor)

This dataset contains all the configurations. We now just need some tool to create batches from it.

Batching with a DataLoader

TorchBasisMatrixDataset is just an extension of torch.utils.data.Dataset. Therefore, you don’t need a graph2mat specific tool to create batches. In fact, we recommend that you use torch_geometric’s DataLoader:

[6]:

from torch_geometric.loader import DataLoader

All you need to do is pass the dataset and specify a batch size.

[7]:

loader = DataLoader(dataset, batch_size=2)

In this case we use a batch size of 2, which is our total number of configurations. Therefore, we will only have one batch.
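As a quick check of the batch count, here is a minimal sketch of the arithmetic a PyTorch-style loader follows. The helper `num_batches` is hypothetical (it is not part of graph2mat or torch); it only assumes the usual `drop_last` behaviour, where an incomplete final batch is kept by default.

```python
import math


def num_batches(num_samples: int, batch_size: int, drop_last: bool = False) -> int:
    """Number of batches a loader yields for `num_samples` items.

    Hypothetical helper for illustration only.
    """
    if drop_last:
        # An incomplete final batch is discarded.
        return num_samples // batch_size
    # An incomplete final batch is kept.
    return math.ceil(num_samples / batch_size)


print(num_batches(2, 2))  # our case: 2 configurations, batch size 2
print(num_batches(5, 2))
print(num_batches(5, 2, drop_last=True))
```

With our 2 configurations and a batch size of 2 this gives a single batch, as stated above.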

Let’s loop through the batches (only 1) and print them:

[8]:

for data in loader:
    print(data)

TorchBasisMatrixDataBatch(

metadata={ data_processor=[2] },

edge_index=[2, 8],

num_nodes=6,

neigh_isc=[8],

n_edges=[2],

positions=[6, 3],

shifts=[8, 3],

cell=[6, 3],

nsc=[2, 3],

node_attrs=[6, 2],

point_types=[6],

edge_types=[8],

batch=[6],

ptr=[3]

)
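The shapes printed above follow from the way graph batching concatenates individual graphs into one big disconnected graph. The sketch below reproduces that bookkeeping in plain Python. The function `collate_graphs` and the edge list `chain_edges` are hypothetical names for illustration, and the edge ordering that graph2mat actually produces may differ; only the general scheme (a `batch` vector, a `ptr` array, and edge indices shifted by the number of preceding nodes) is assumed.

```python
def collate_graphs(graphs):
    """Concatenate graphs given as (num_nodes, edges) pairs into one batch.

    `edges` is a list of (source, target) pairs that use node indices
    local to each graph. Hypothetical helper for illustration only.
    """
    batch, ptr, sources, targets = [], [0], [], []
    for graph_index, (num_nodes, edges) in enumerate(graphs):
        offset = ptr[-1]  # number of nodes in all previous graphs
        # Each node remembers which graph it came from.
        batch.extend([graph_index] * num_nodes)
        # Edge indices are shifted so that they point to the batched nodes.
        for src, dst in edges:
            sources.append(src + offset)
            targets.append(dst + offset)
        # ptr stores where each graph starts and ends in the node arrays.
        ptr.append(offset + num_nodes)
    return {"batch": batch, "ptr": ptr, "edge_index": [sources, targets]}


# Three points in a line where only neighbouring points overlap,
# with both directions of each edge stored (an assumption of this sketch).
chain_edges = [(0, 1), (1, 0), (1, 2), (2, 1)]
batched = collate_graphs([(3, chain_edges), (3, chain_edges)])

print(batched["batch"])       # [0, 0, 0, 1, 1, 1]
print(batched["ptr"])         # [0, 3, 6]
print(batched["edge_index"])  # [[0, 1, 1, 2, 3, 4, 4, 5], [1, 0, 2, 1, 4, 3, 5, 4]]
```

Two graphs with 3 nodes and 4 directed edges each give 6 nodes, 8 edges and a `ptr` of length 3, which matches `num_nodes=6`, `edge_index=[2, 8]`, `batch=[6]` and `ptr=[3]` in the printed batch.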

Calling the function

We now have our batch object, data. It is a torch_geometric Batch object (a TorchBasisMatrixDataBatch, as printed above). In the previous notebook, we called the function with a single TorchBasisMatrixData object. One might think that batched data would make calling the function more complicated.

However, you use exactly the same code to compute matrices in a batch. First, of course, we need to get our inputs, which we generate artificially here (the batch has 6 nodes, and each of them needs a scalar and a vector):

[9]:

node_inputs = get_environment_representation(data, node_feats_irreps)

node_inputs

[9]:

tensor([[ 0.6927, 0.5388, 0.2382, -1.2004],

[-0.2367, -0.5671, 0.2236, 0.0000],

[ 0.6927, 0.5388, 0.2382, 1.2004],

[-0.6700, 1.0264, -2.2031, 0.3307],

[-0.3570, 2.0368, 0.4811, 0.4526],

[-0.6700, 1.0264, -2.2031, -0.3307]])

And from them, we compute the matrices. We use the inputs as well as the preprocessed data in the batch, with exactly the same code that we have already seen:

[10]:

node_labels, edge_labels = model(data, node_feats=node_inputs)

Disentangling the batch

As simple as it is to run a batched calculation, disentangling everything back into individual cases is harder. It is even harder in our case, in which we have batched sparse matrices.

Not only do you have to handle the indices of the sparsity pattern, but also the additional aggregation of the batches. This is why you can see so many pointer arrays in the BasisMatrixData objects: they are needed to keep track of how the data is organized.
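To give a feeling for what this bookkeeping involves, here is a simplified sketch of splitting a flat array of per-node blocks back into graphs. It is not graph2mat's actual implementation: `split_node_blocks`, `block_sizes` and the flat layout are assumptions made for illustration. Each node owns a block of n x n values, where n is the number of basis functions of its point type (1 for "A", 5 for "B").

```python
from itertools import accumulate


def split_node_blocks(flat_values, block_sizes, ptr):
    """Split flat per-node blocks back into one list of blocks per graph.

    Hypothetical helper: `block_sizes[i]` is the number of values owned by
    node i, and `ptr` marks where each graph starts and ends in the node list.
    """
    # Start offset of each node's block inside flat_values.
    offsets = [0, *accumulate(block_sizes)]
    per_graph = []
    for start, end in zip(ptr[:-1], ptr[1:]):
        blocks = [flat_values[offsets[i]:offsets[i + 1]] for i in range(start, end)]
        per_graph.append(blocks)
    return per_graph


# Batched point types are A, B, A, B, A, B: blocks of 1*1 and 5*5 values.
block_sizes = [1, 25, 1, 25, 1, 25]
flat_values = list(range(sum(block_sizes)))  # stand-in for predicted values
ptr = [0, 3, 6]

per_graph = split_node_blocks(flat_values, block_sizes, ptr)
print([[len(block) for block in blocks] for blocks in per_graph])
# [[1, 25, 1], [25, 1, 25]]
```

Edge blocks need the same treatment, with the extra step of mapping the batched edge indices back to indices local to each graph.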

Making use of those indices, the data processor can disentangle the batch and give you the individual cases. You’ll be happy to see that you can call the matrix_from_data method of the processor, just as you did with the single matrix case, and it will return a tuple of matrices instead of just one:

[11]:

matrices = processor.matrix_from_data(

data,

predictions={"node_labels": node_labels, "edge_labels": edge_labels},

)

matrices

[11]:

(<Compressed Sparse Row sparse array of dtype 'float32'

with 47 stored elements and shape (7, 7)>,

<Compressed Sparse Row sparse array of dtype 'float32'

with 71 stored elements and shape (11, 11)>)

Note

matrix_from_data has automatically detected that the data passed was a torch_geometric Batch object. There is also the is_batch argument to state explicitly whether the data is a batch. In addition, the processor has the yield_from_batch method, which is more explicit and returns a generator instead of a tuple. This is better for very big matrices if you want to process them one at a time.

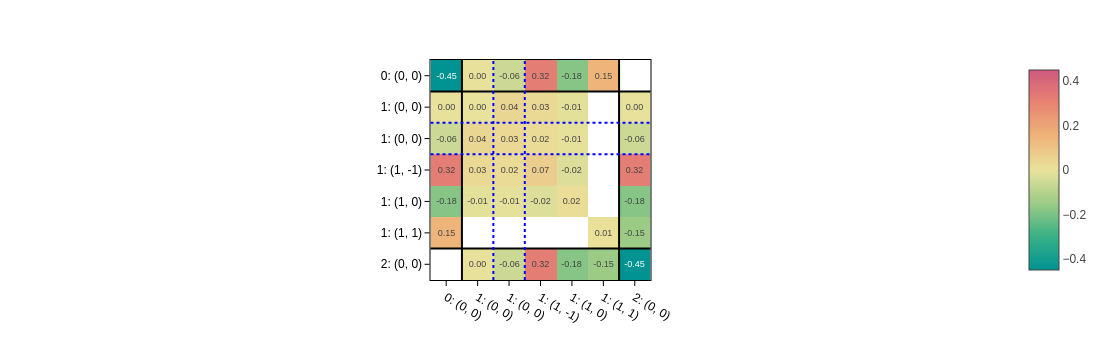

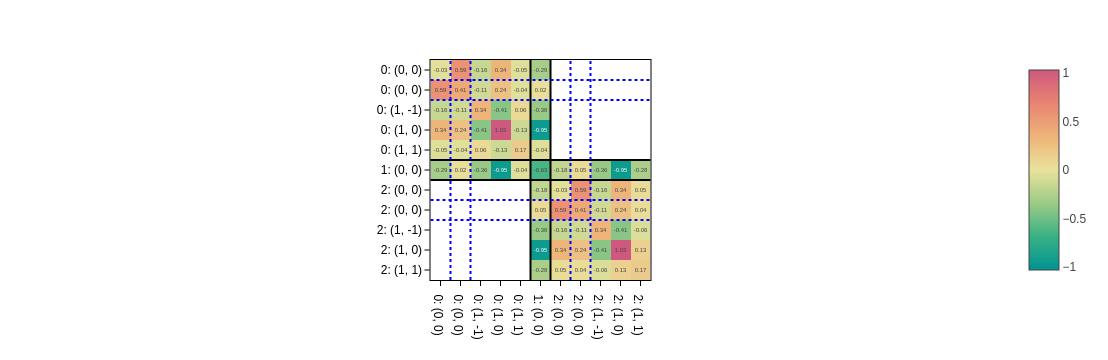

As we already did in the previous notebook, we can plot the matrices:

[12]:

for config, matrix in zip(configs, matrices):
    plot_basis_matrix(
        matrix,
        config,
        point_lines={"color": "black"},
        basis_lines={"color": "blue"},
        colorscale="temps",
        text=".2f",
        basis_labels=True,
    ).show()

Try to relate the matrices to the systems we created and see if their shape makes sense :)
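If you want to check your reasoning, the following sketch computes the expected shape and number of stored elements from the basis alone. It relies on two assumptions that are not stated in this notebook: two points are treated as neighbours when their distance is smaller than the sum of their radii R, and every point block and neighbour block is stored densely. The function `expected_matrix_stats` is a hypothetical helper, not part of graph2mat.

```python
import math

# Number of basis functions per point type: "0e" is 1 function,
# "2x0e + 1o" is 2 scalars plus one 3-component vector, so 5 functions.
basis_sizes = {"A": 1, "B": 5}
radii = {"A": 2.0, "B": 5.0}


def expected_matrix_stats(point_types, positions):
    """Return (shape, stored_elements) expected for one configuration.

    Hypothetical helper: assumes an overlap criterion for neighbours and
    dense storage of each block.
    """
    sizes = [basis_sizes[t] for t in point_types]
    dim = sum(sizes)
    # Each point contributes a dense block on the diagonal.
    stored = sum(size * size for size in sizes)
    for i in range(len(point_types)):
        for j in range(len(point_types)):
            if i == j:
                continue
            distance = math.dist(positions[i], positions[j])
            # Each ordered pair of overlapping points contributes one block.
            if distance < radii[point_types[i]] + radii[point_types[j]]:
                stored += sizes[i] * sizes[j]
    return (dim, dim), stored


positions = [(0.0, 0.0, 0.0), (6.0, 0.0, 0.0), (12.0, 0.0, 0.0)]
print(expected_matrix_stats(["A", "B", "A"], positions))  # ((7, 7), 47)
print(expected_matrix_stats(["B", "A", "B"], positions))  # ((11, 11), 71)
```

Both results agree with the shapes and stored-element counts reported for the two sparse matrices returned by matrix_from_data.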

Summary and next steps

In this notebook we learned how to batch systems and then use the data processor to unbatch them.

The next steps could be:

Understanding how to train the function to produce the target matrix. See this notebook.

Combining this function with other modules for your particular application.